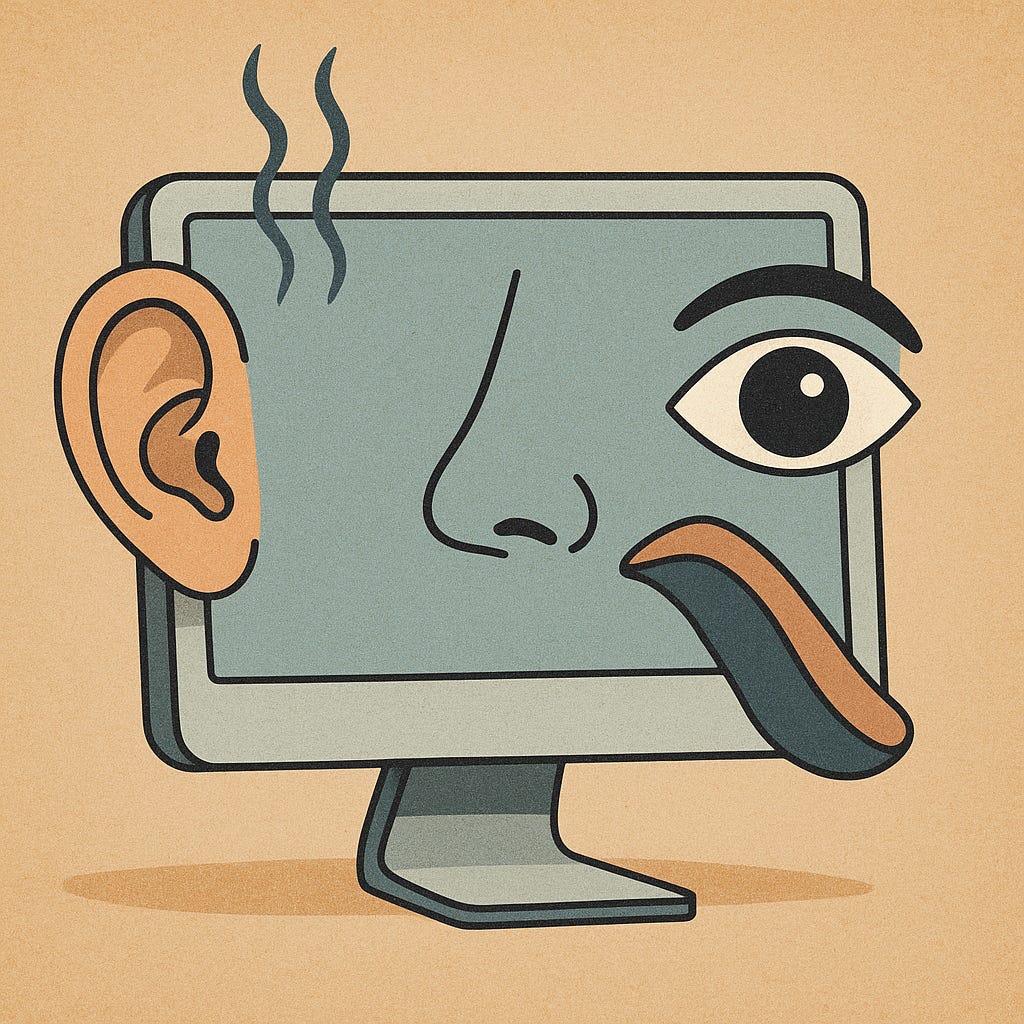

AI's Umwelt

sense-making and sense-scapes

I stumbled across the concept of an umwelt while reading David Eagleman, a neuroscientist and popular author. It's the idea that each living being experiences a distinct sensory world. Human experience is dominated by inputs from our five senses: we live in a world of colour and sound and smell and taste and touch. These senses also have a certain balance. Some people have a more acute sense of hearing, for example. A dominant sense informs the dominant kind of intelligence; in this example, a musical intelligence (in Howard Gardner's multiple-intelligences framing) would be the most refined. Our sense inputs make up our world of external experience, and our internal experience is coloured by the external in obvious ways. If you live in sound, you think in sound.

Among humans, these differences are important but subtle. Across the animal kingdom, they become dramatic. This week, I read a fascinating review of an upcoming book, ‘The Arrogant Ape’ by the primatologist Christine Webb. The review, by Cass Sunstein, is here: https://democracyjournal.org/magazine/78/were-not-so-special/

> For example, hummingbirds can see colors that human beings are not even able to imagine. Elephants have an astonishing sense of smell, which enables them to detect sources of water from miles away. Owls can hear the heartbeat of a mouse from a distance of 25 feet. Because of echolocation, dolphins perceive sound in three dimensions. They know what is on the inside of proximate objects; as they swim toward you, they might be able to sense your internal organs. Pronghorn antelopes can run a marathon in 40 minutes, and their vision is far better than ours. On a clear night, Webb notes, they might be able to see the rings of Saturn. We all know that there are five senses, but it’s more accurate to say that there are five human senses. Sharks can sense electric currents. Sea turtles can perceive the earth’s magnetic field, which helps them to navigate tremendous distances. Some snakes, like pythons, are able to sense thermal radiation. Scientists can give many more examples, and there’s much that they don’t yet know.

It's not only that animals have heightened versions of our senses; some have senses we cannot even conceive of. Electric currents, thermal radiation, air compression: there are myriad and mysterious ways to experience the world.

This brings me to AI and our fear of sentient computers. We can't help but think about sentience in human terms. What is the umwelt of a machine? Does the question even make sense? Computers are vast fields of silicon logic gates, switching binary signals in complex sequences to perform any number of tasks. Can these silicon boards and binary gates have feelings? Everything in our biological umwelt screams no. But then again, we can't feel the earth's magnetic field or see the rings of Saturn.

There is an intellectual move I could make here, but it would be clumsy. I've seen it done. You could point to humans as beings made up of known parts: DNA, cells, tissue, organs, brains and bodies. Humans are machines, no? Wetware instead of hardware. Cells instead of NAND gates. If our complex hardware can produce consciousness and felt experience, why not a machine's? In theory, I cannot dispute that machine consciousness could be designed. My guess is simply that it is unlikely.

Here's why. There is a key distinction between the evolutionary environment and pressures that shaped humans and those that shape AIs and computers. Our umwelt evolved in a biological setting, to overcome biological survival problems. Computer AIs have no such environment. Their evolutionary timeframe is infinitesimal, and their feedback mechanism is not nature per se: it is humans. Humans shape computers. For human tasks. For human uses. AIs evolve in the context of recorded human actions and ideas.

What use does a machine have for pain? For pleasure? For that matter, for a desire to survive, thrive, or propagate? Computers and AIs respond to human inputs and to objectives humans have designed. As miraculous as deep learning is, it's deep software, and top researchers explain it in terms of tokens, statistics and prediction.

The umwelt of a machine, as far as I can imagine, is not a concept that makes sense. Biological umwelts evolved; computers have had neither the evolutionary time nor the pressure to develop experience, let alone consciousness. As limited as our understanding of consciousness is, we know it is linked to experience. We have even defined it that way: consciousness is the experience of being someone or something.

Computers and AI models do not have umwelts, experiences, or consciousness. Do not let the smart folks behind today's human-designed AIs fool you.